- This assignment is regarding to Binocular Stereo Model using Shirai algorithm.

- Stereo vision can be seen as vision based on two cameras or one moving camera.

In the process at least two images are used to make comparisons between them. They are

processed line by line and it's assumed that corresponding pixels are in the same row

in both images (which were taken at the same time and have the same size), also known as

the standard stereo geometry. That means they the images have probably to be adjusted

prior to the operation of the Shirai algorithm.

The general approach is performed in two stages. First, a edge image is computed to have some distinct points to search for. In a constant image it would be without sense to detect any disparities which will only be visible if edge can be seen. In a second step the algorithm tries to find corresponding points in the right image to edge points of the left image. If a correspondence is found then it's supposed that this is the result of the same object point representing in the left and right image. Finding this is known as the correspondence problem. The difference in position between corresponding points in the images is known as the disparity of the images.

- Implementation:

Calculating corresponding image points

and a disparity map for pairs of gray value stereo images: The standard stereo

geometry is assumed for the given images, i.e. it is sufficient to restrict the

search for a corresponding point on the identical row in the second image.

Furthermore it is assumed that he search always starts at an edge pixel only (see above).

And, a row equality y=y(left)=y(right) is satisfied in case

of ideal assumption of the standard stereo geometry.

Shirai algorithm determines a point q in the right image as a corresponding image point

to point p in the left image where the similarity measure takes a global

minimum within the search interval, and this distinct global minimum must be smaller or

equal to an a-priori specified threshold d1. If such a global minimum<=d1,

does not exist within the search interval then it has to be decided

whether to continue the search: if all of the values SIMILARITY (p,q) are

greater or equal to an a-priori specified threshold d2>d1, then the

search is at this position is stopped and the next edge pixel p in the left edge image

is selected for the initialization of a further search process.

In the other case (there are SIMILARITY values between d1 and d2), the search process is

modified: The interval is examined for a possible reduction: All points q at the

boundary of the search space are excluded for which it holds SIMILARITY (p,q)>=d3,

(d3 is a prior specified threshold>=d2). Then the search goes on as

previously described.

One channel images were processed as they are. Former colour images were given in single

images, each for one channel R,G,B. We processed them colour channel by colour channel,

and determined then if at least in one image a disparity at a image point (x,y) was found.

It's then written to the result images.

- Performance of the operator:

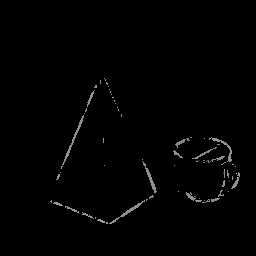

Represents resulting disparity maps( defined values exist only at edge positions)

for the stereo pair of image. Disparity is equal to |xleft-xright|= xleft - xright;

The disparities are gray value encoded: the darker it is the large the disparity value,

i.e. the closer the surface point to the camera). On the left a scalar image of a

separate color channel( of the left image of the stereo pair) is represented, and on

the right the encoded disparity map is shown as calculated for this channel.

We use different d1, d2, d3 to compare the outputs. When we use d1=5..10, we

got a more clear pictures. This was invariant to a change of d2 and d3. Thus we set

d3=d2 as it was recommended by Prof.Klette in the lecture.

Here is the output of our program using different d1,d2 and d3 (set bold in the captions):

./ass2shirai 1 source\ BMP/complex results\ JPG/complex_01_05.jpg 1 5 5 0.3

./ass2shirai 1 source\ BMP/complex results\ JPG/complex_01_10.jpg 1 10 10 0.3

./ass2shirai 1 source\ BMP/complex results\ JPG/complex_05_10.jpg 5 10 10 0.3

./ass2shirai 1 source\ BMP/complex results\ JPG/complex_10_20.jpg 10 20 20 0.3

./ass2shirai 1 source\ BMP/complex results\ JPG/complex_10_50.jpg 10 50 50 0.3

./ass2shirai 1 source\ BMP/complex results\ JPG/complex_20_50.jpg 20 50 50 0.3

./ass2shirai 1 source\ BMP/complex results\ JPG/complex_30_50.jpg 30 50 50 0.3

./ass2shirai 3 source\ BMP/tuch results\ JPG/tuch_05_20.jpg 5 20 20 0.3

./ass2shirai 3 source\ BMP/tuch results\ JPG/tuch_05_20.jpg 5 20 20 0.3 ./ass2shirai 3 source\ BMP/tuch results\ JPG/tuch_10_20.jpg 10 20 20 0.3

./ass2shirai 3 source\ BMP/tuch results\ JPG/tuch_10_20.jpg 10 20 20 0.3 ./ass2shirai 3 source\ BMP/tuch results\ JPG/tuch_20_50.jpg 20 50 50 0.3

./ass2shirai 3 source\ BMP/tuch results\ JPG/tuch_20_50.jpg 20 50 50 0.3 ./ass2shirai 3 source\ BMP/tuch results\ JPG/tuch_30_50.jpg 30 50 50 0.3

./ass2shirai 3 source\ BMP/tuch results\ JPG/tuch_30_50.jpg 30 50 50 0.3 ./ass2shirai 1 source\ BMP/pyrtasse results\ JPG/pyrtasse_1_05.jpg 1 5 5 0.3

./ass2shirai 1 source\ BMP/pyrtasse results\ JPG/pyrtasse_1_05.jpg 1 5 5 0.3 ./ass2shirai 1 source\ BMP/pyrtasse results\ JPG/pyrtasse_01_50.jpg 1 50 50 0.3

./ass2shirai 1 source\ BMP/pyrtasse results\ JPG/pyrtasse_01_50.jpg 1 50 50 0.3 ./ass2shirai 1 source\ BMP/pyrtasse results\ JPG/pyrtasse_10_50.jpg 10 50 50 0.3

./ass2shirai 1 source\ BMP/pyrtasse results\ JPG/pyrtasse_10_50.jpg 10 50 50 0.3 ./ass2shirai 1 source\ BMP/pyrtasse results\ JPG/pyrtasse_20_50.jpg 20 50 50 0.3

./ass2shirai 1 source\ BMP/pyrtasse results\ JPG/pyrtasse_20_50.jpg 20 50 50 0.3 ./ass2shirai 1 source\ BMP/pyrtasse results\ JPG/pyrtasse_30_50.jpg 30 50 50 0.3

./ass2shirai 1 source\ BMP/pyrtasse results\ JPG/pyrtasse_30_50.jpg 30 50 50 0.3

- Shirai's algorithm has certain drawbacks, i.e. areas at the image

border which are nearer to the current point of search as half of the window size

can't be processed, because then the algorithm wouldn't find certain pixels (because

they are beyond the image's border and couldn't be read.

Shirai's algorithm is time and resource intensive, even if it calcullated disparities just for edge points. Noise images without a prior smoothing could extent the running time heavily, so a smooting and median could be advantegous.

The implementation of the algorithm depends heavily on the datastructures used. We experienced that fact our own.